The bot at your side

Every year, thousands of people in Germany experience discrimination. There are too few counseling centers, and not all of those affected find the support and help they need. Lawyer Said Haider and his team are working on an intelligent chatbot that supports victims of discrimination online, 24/7. Here are the most important facts, plus an interactive video chat with the founder.

The situation

In 2020, the German Federal Anti-Discrimination Agency reported a 78% increase in counseling requests compared to the previous year. Around one third of reported cases involved discrimination on the basis of ethnic or racial origin, making it one of the most frequently cited forms of discrimination. In their analysis, the authors of the report "Discrimination in Germany" emphasize that the figures are not representative and that there is probably a high degree of "underreporting": many cases go unrecorded because reporting an incident can cause anxiety and stress.

According to the 2022 "National Discrimination and Racism Monitor", almost two thirds of those surveyed in Germany said they had either experienced racial discrimination themselves or had seen or heard such incidents as witnesses. The anti-discrimination agency also registers cases of insult and humiliation on the basis of gender, religion, disability, age, sexual identity and ideology. "We are observing an increased social awareness of discrimination," states the agency's annual report. "More and more people are actively seeking qualified advice." But the report also notes: "Due to high demand, we have had to temporarily discontinue telephone counseling – those seeking advice can currently only contact us via our counseling form or in writing."

An interactive interview with Said Haider

The chatbot's mission

"To be the 911 for discrimination advice on the web": a point of contact that everyone knows and that can be reached at any time. This is the ambitious goal of Yana, the anti-discrimination chatbot that lawyer Said Haider and his team have been developing since 2021 with funding from the German Federal Ministry for Economic Affairs and Climate Action and the Robert Bosch Stiftung. Yana stands for "You are not alone", and the name says it all. According to Haider, the big advantage of a chatbot is that "the program is available 24/7 and speaks many languages; it doesn't have to take breaks, which means you don't have to wait in line."

So far, such automated communication programs have mostly been used in customer service. Said Haider expects "that the technology will increasingly be used in the social sector as well." App stores already offer "social bots" or "AI companions" that promise to help against loneliness or depression, as well as bots designed to diagnose and prevent dementia.

The team is currently conducting various online studies to find out which information is most helpful to people affected by discrimination, and in which situations. All staff members have experienced discrimination themselves. The resulting information, research and data are fed into the software. "A bot is a good guide," says Said Haider, "but it also needs material to refer to."

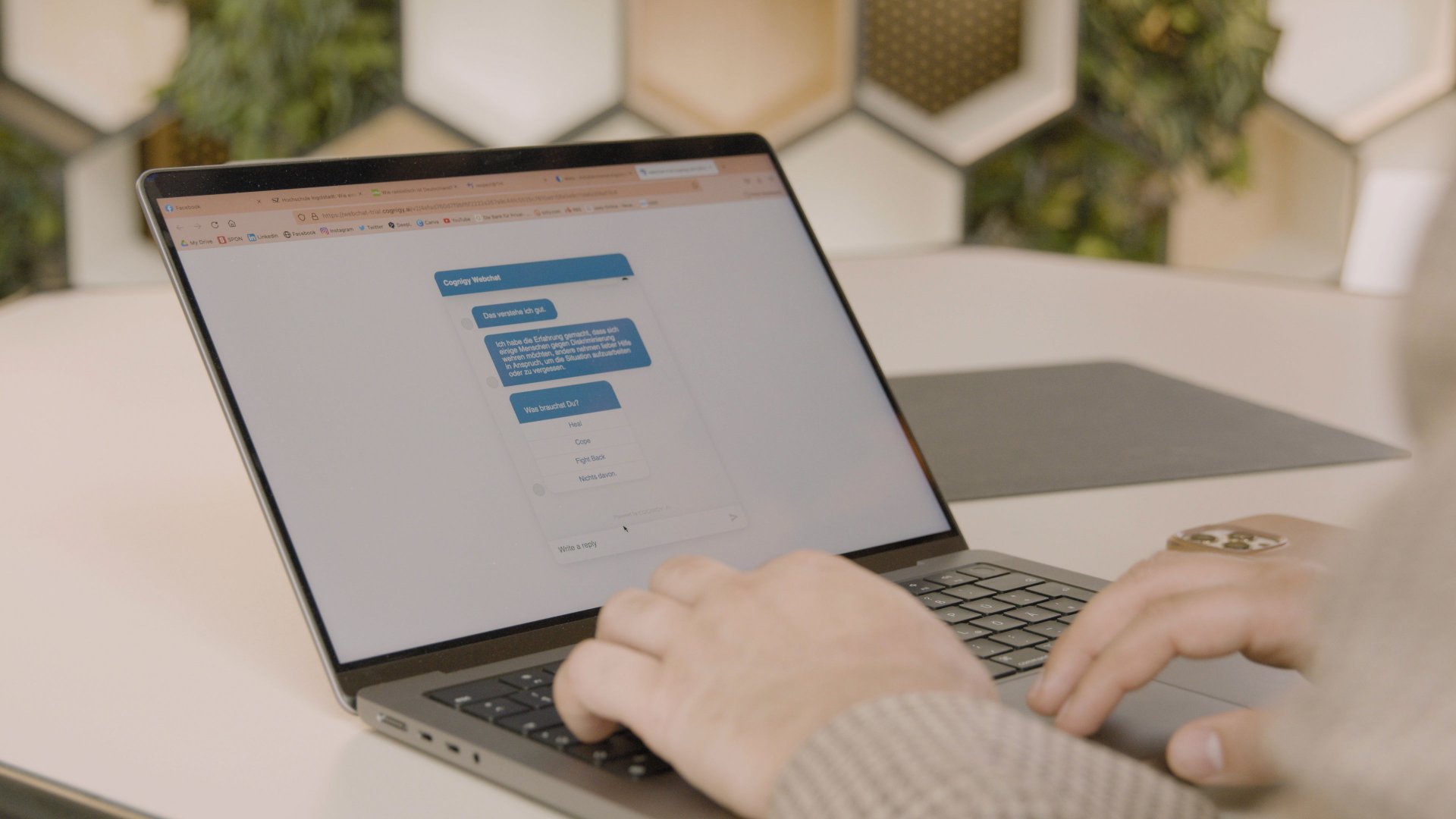

The tool

"Do you want to tell me your first name?" Rather than getting straight to the point, Yana first tries to build rapport. This is not the result of a machine calculation but of a human designer's hunch: people are more likely to engage with a bot when it asks for personal information selectively.

According to Said Haider, the anti-discrimination chatbot is not intended to provide therapeutic advice to those affected, but to show them appropriate courses of action based on the information they provide. What do I need to know? Where is the nearest counseling center – and how can I get an appointment there?

The chatbot is built on conversational AI that is being developed with the open-source framework Rasa. The program performs three key steps:

- Natural language understanding: The raw text of user messages is converted into structured data that captures the user's likely intent.

- Dialogue management: Based on the user's messages and the context of the conversation, the machine-learning-based program decides what the bot should do or suggest next. For example, it can give an initial assessment of whether a reported incident is legally relevant, or find the nearest counseling center.

- The chat as a hub: By connecting to various messaging channels, databases, APIs and other data sources, the software can automatically refer users to further services. From 2023, for example, users should be able to book an anonymous telephone appointment with a counseling center via the chatbot.
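To make the three steps more concrete, here is a minimal, standard-library-only Python sketch of how the work is divided. It is a hypothetical illustration under simplifying assumptions, not Rasa's API and not Yana's actual code: keyword matching stands in for a trained intent classifier, a few hand-written rules stand in for the machine-learned dialogue policy, and an in-memory dictionary stands in for the databases and APIs the hub would connect to. All intent, action and counseling-center names are invented for the example.

```python
import re
from dataclasses import dataclass, field
from typing import List, Optional

# Hypothetical intents and trigger words: a stand-in for a trained NLU model.
INTENT_KEYWORDS = {
    "report_incident": {"discriminated", "insulted", "harassed", "refused"},
    "find_counseling": {"counseling", "advice", "appointment"},
    "greet": {"hello", "hi", "hey"},
}

# Hypothetical counseling-center directory: a stand-in for a database or API.
COUNSELING_CENTERS = {
    "berlin": "Example Counseling Center Berlin",
    "hamburg": "Example Counseling Center Hamburg",
}


@dataclass
class ParsedMessage:
    """Structured data extracted from one raw user message (step 1 output)."""
    intent: str
    city: Optional[str] = None


@dataclass
class Tracker:
    """Conversation context that the dialogue policy can consult (step 2 input)."""
    city: Optional[str] = None
    actions: List[str] = field(default_factory=list)


def parse(text: str) -> ParsedMessage:
    """Step 1, NLU: map raw text to an intent plus an optional city entity."""
    words = set(re.findall(r"[a-zäöüß]+", text.lower()))
    # First intent (in dict order) whose keywords overlap the message wins.
    intent = next(
        (name for name, keys in INTENT_KEYWORDS.items() if words & keys),
        "unknown",
    )
    city = next((c for c in COUNSELING_CENTERS if c in words), None)
    return ParsedMessage(intent, city)


def decide(tracker: Tracker, msg: ParsedMessage) -> str:
    """Step 2, dialogue management: choose the next action from message + context."""
    if msg.city:
        tracker.city = msg.city  # remember the city for later turns
    # Context: a bare city name only makes sense if we just asked for one.
    asked_for_city = bool(tracker.actions) and tracker.actions[-1] == "ask_city"
    if msg.intent == "find_counseling" or (asked_for_city and msg.city):
        return "find_center" if tracker.city else "ask_city"
    if msg.intent == "report_incident":
        return "assess_incident"
    if msg.intent == "greet":
        return "ask_name"
    return "fallback"


def run_action(action: str, tracker: Tracker) -> str:
    """Step 3, hub: execute the chosen action, e.g. by querying a directory."""
    if action == "find_center":
        return f"The nearest center I know of is {COUNSELING_CENTERS[tracker.city]}."
    replies = {
        "ask_city": "Which city are you in?",
        "assess_incident": "I'm sorry that happened. Let's check whether it may be legally relevant.",
        "ask_name": "Hello! Do you want to tell me your first name?",
        "fallback": "Sorry, I didn't understand. Could you rephrase that?",
    }
    return replies[action]


def respond(tracker: Tracker, text: str) -> str:
    """Run one user message through all three steps and record the action taken."""
    action = decide(tracker, parse(text))
    tracker.actions.append(action)
    return run_action(action, tracker)


if __name__ == "__main__":
    t = Tracker()
    print(respond(t, "I need advice"))  # Which city are you in?
    print(respond(t, "Berlin"))  # The nearest center I know of is Example Counseling Center Berlin.
```

The second turn shows why dialogue management needs context: "Berlin" on its own carries no intent, but because the previous action was to ask for a city, the policy routes it to the directory lookup. In a real Rasa project, intent classification and dialogue policies are typically trained from example conversations and configured rather than written as if-statements; the sketch only mirrors how the three stages hand data to one another.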

The artificial intelligence behind Yana is a self-learning system that needs data in order to learn. This is another reason why the developers' exchange with the community is essential, says Haider: not only to find out what people really need, but also to train the AI. In other words, the more people interact with the bot, the more human it becomes, and the more people it can help.